OpenAI has come under fresh scrutiny after sharply reducing the time and resources it devotes to safety testing of its most advanced artificial intelligence models, a move that has alarmed some testers and industry experts alike. As evaluation periods have shrunk from several months to just days, internal staff and select third-party evaluators have warned that critical safeguards are being sidelined in a frantic race to release new technologies. Critics argue that the accelerated timelines are driven by competitive pressure, as the company strives to keep its edge in an increasingly crowded market that pits it against major tech giants and emerging start-ups eager to claim their share of the AI revolution.

People familiar with the situation say the compressed timeframe has considerably diminished the rigor of the testing process. Some earlier models underwent as much as six months of review before public release, during which vulnerabilities were carefully identified and addressed. With the latest models now being evaluated in less than a week, one tester lamented, "We had more thorough safety testing when the technology was less critical, which now seems to be sacrificed in the interest of speed." The reduction in testing time not only raises the risk that dangerous flaws slip through the cracks but also heightens concerns about the possible weaponization of the models, a risk that grows as these language models become more capable.

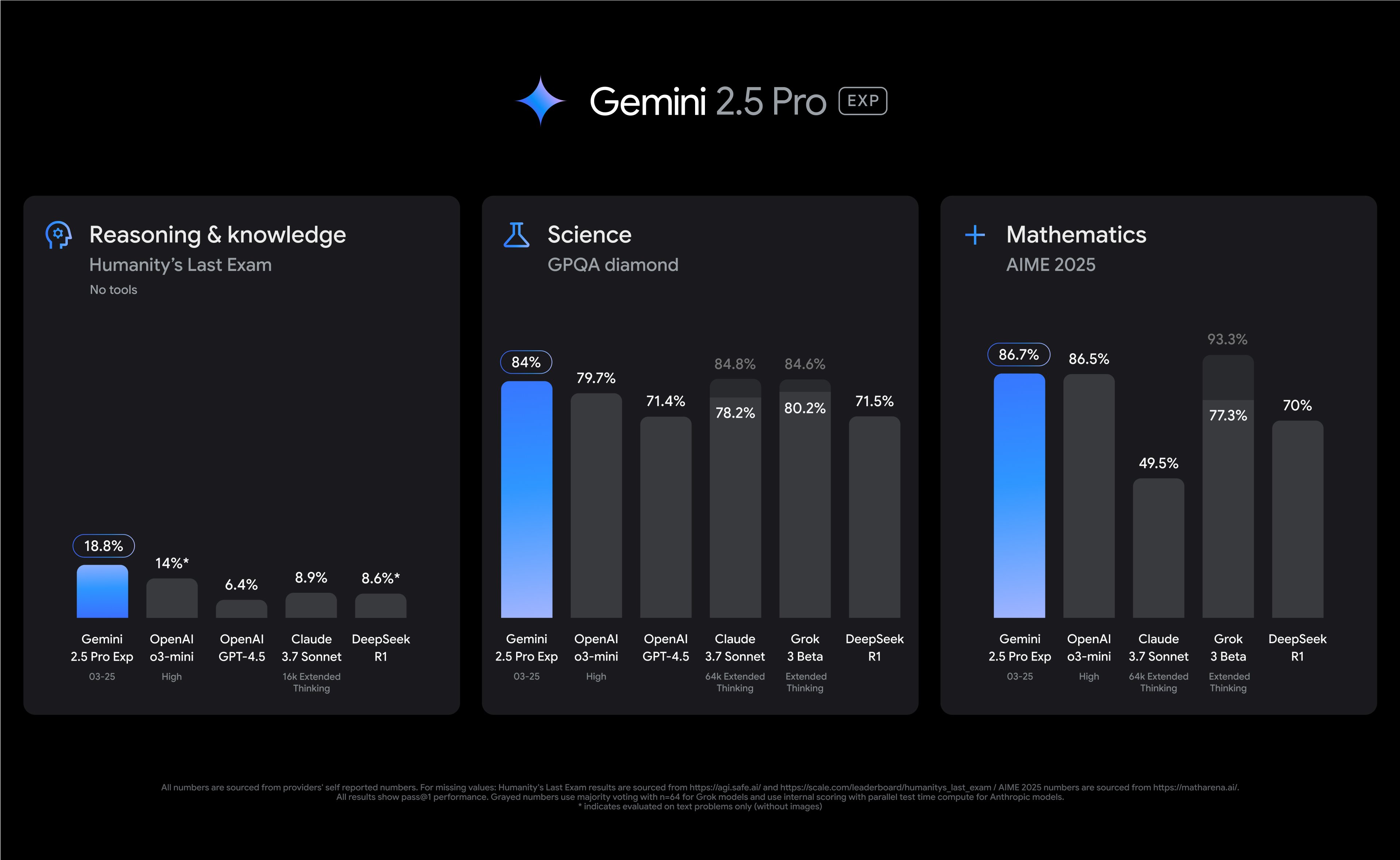

The pressure to expedite releases is reportedly fueled by an increasingly fierce competitive landscape. With rivals such as Meta, Google, and Elon Musk’s xAI charging ahead, OpenAI appears determined to secure market share by being first to unveil its latest innovations. This drive for rapid advancement, however, has led some to question whether the company is adequately prioritizing public safety. Critics contend that swift rollouts may undermine comprehensive risk assessments, endangering not only investors and users but also the broader AI ecosystem. "There’s a real danger here of underestimating the worst-case scenarios," said Daniel Kokotajlo, a former OpenAI researcher who now leads the non-profit AI Futures Project. Kokotajlo stressed that, without stringent safety testing protocols, the public remains in the dark about the inherent risks, which only deepens skepticism about industry practices.

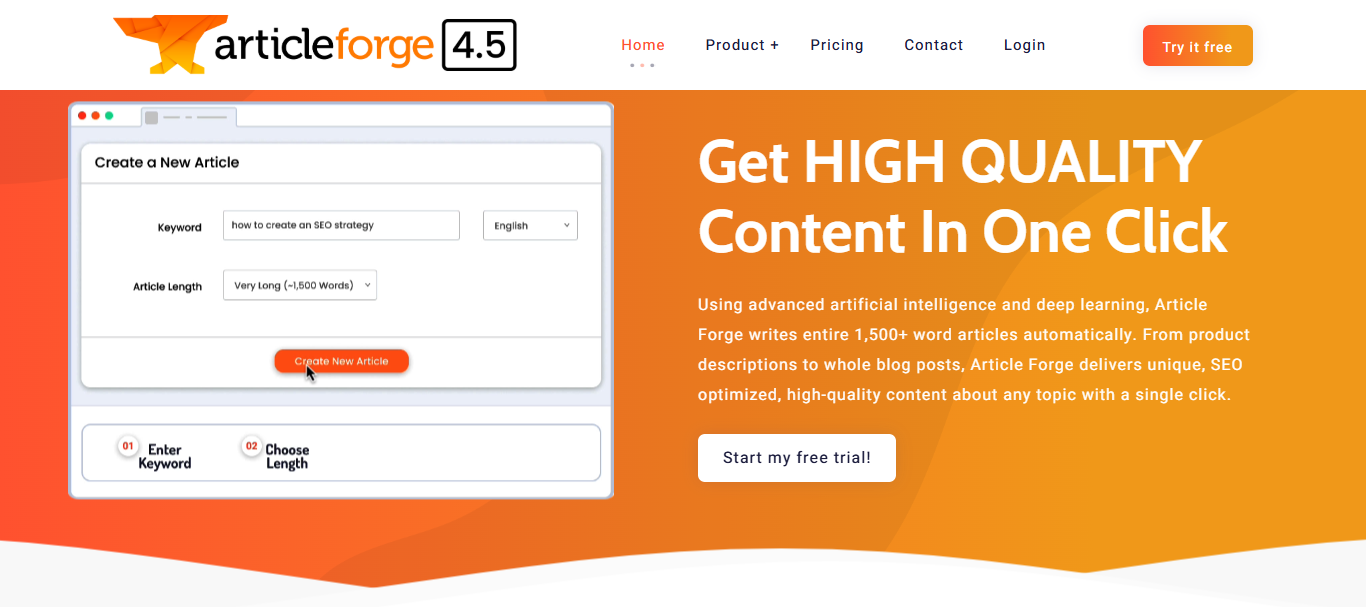

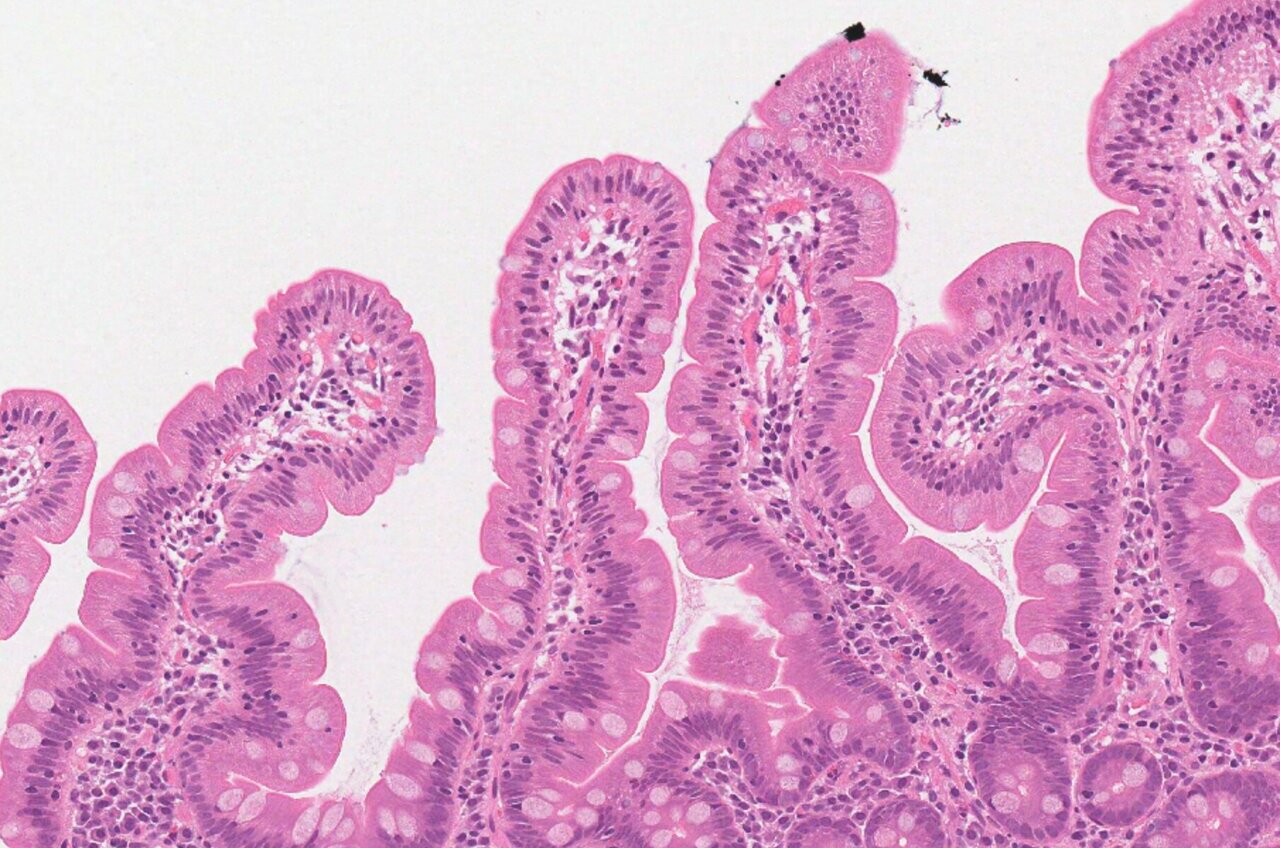

Adding to the concern is OpenAI’s approach to fine-tuning its models on specialized tasks in order to assess capabilities that could have catastrophic consequences in the wrong hands. Although the company has previously committed significant resources to building tailored data sets for threats such as enhanced pathogen transmissibility, recent reports suggest that the same level of commitment is not being extended to its latest, more advanced models. Instead, older and less capable systems have received the bulk of these detailed evaluations, leaving newer technologies without the same depth of scrutiny. Steven Adler, a former OpenAI safety researcher, criticized this approach: “It is great that OpenAI set a high bar by committing to customized safety tests, but if it is not following through on this commitment consistently, the public deserves to know.”

In response to the mounting criticism, OpenAI has defended its approach, saying that improvements in automated testing and evaluation have allowed it to work more efficiently without compromising on safety. Company representatives maintain that there is no single formula for testing such advanced technologies and that their methods, as disclosed in recent safety reports, represent the best possible practice under current industry conditions. They insist that, at least for potentially catastrophic risks, thorough testing and appropriate safeguards have not been compromised.

As the debate over balancing rapid innovation against robust safety measures intensifies, industry insiders and external watchdogs alike are calling for clearer, standardized benchmarks for AI safety. With the EU AI Act set to require safety tests on the most powerful models later this year, the global conversation about responsible AI development is evolving rapidly. For now, OpenAI’s compressed evaluation timeline remains contentious, highlighting a fundamental challenge facing the industry: how to keep pace with innovation while ensuring that new breakthroughs do not come at the expense of public safety.